OMICS Cluster

The OMICS Compute Cluster was created to support the Excellence Cluster at Lübeck University. It is mainly used to perform calculations for GWAS, NGS, in silico modelling etc.

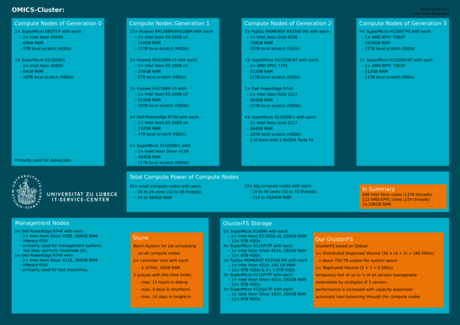

The OMICS Cluster provides a powerful computing platform for the institutes and institutions involved. In its current expansion, it offers in summary 1.600 cpu threads and about 14,3 TB of RAM. The job scheduler SLURM is used to manage and execute the batch-jobs to ensure a fair distribution of this remarkable computing power. A job can allocate and use one or multiple compute nodes.

The machines come in different designs. While some offer a particularly large number of CPU cores (up to 64 or 72 threads), our four high-memory nodes have up to 1TB of RAM. Most of our compute nodes provide 32 to 48 threads and 192 to 384GB of RAM. In addition, our computing nodes all have local hard drives in order to relieve the network file systems and to speed up the calculations. The range extends from approx. 5TB on hard drives to 15TB on SSDs.

A total of over 750 TB of permanent storage space is available in the GlusterFS system for storing project data between the computing jobs.

Steering Committee

Members:

- Prof. Dr. Hauke Busch (Lübecker Institute for Experimental Dermatology, LIED, speaker of the steering committee)

- Prof. Dr. Lars Bertram (Lübecker Interdisciplinary Platform für Genome Analysis, LIGA)

- Prof. Dr. Jeanette Erdmann (Institute for Cardiogenetics, IIEG)

- Prof. Dr. Martin Kircher (Institut für Humangenetik)

- Dipl.-Inf. Helge Illig (IT-Service-Center, ITSC)

Former members:

- Prof. Dr. Saleh Ibrahim (Lübecker Institute for Experimental Dermatology, LIED)

Forms

- User Regulation (in prepraration)

- Project Application Form

- Users Form

History of the OMICS cluster

The idea of the OMICS cluster arose in December 2014 at a first working meeting of Professors L. Bertram, J. Erdmann, S. Ibrahim and Dipl.-Inf. H. Illig. In May of the following year, 230,000 euros were invested in the first expansion stage of the OMICS cluster. This was primarily financed by the Inflammation Research Excellence Cluster. It comprised the first storage, a management node and 17 computing nodes, one of which was the Bignode with 72 CPU threads. The first full-time administrator of the cluster became Frank Rühlemann in October of the same year.

In May 2016, the cluster got its second expansion stage, which included an increase in storage capacity, a second management node and the acquisition of a few more computing nodes. Of the 230,000 euros required for this, 40,000 were contributed by LIED, the remaining 190,000 were provided by the Erdmann, Bertram and Lill working groups.

At the end of 2016, Professor Busch joined the steering group and was appointed the new spokesperson.

In January 2017, Gudrun Amedick was hired as the second administrator for the cluster.

In the course of 2017, the first three dedicated GlusterFS servers were procured in order to switch from a distributed volume with the two storages to a distributed dispersed volume (4 + 2) via independent standard servers. This should simplify future expansion and distribute the costs of replacing obsolete hardware better over time.

Starting in 2018, the new GlusterFS structure was expanded annually by three servers, each of which allows approx. 192TB of new storage space.

At the request of the user, an NFS storage with around 20TB of space was procured in 2019. This allows a higher small file performance and is therefore used as cross-node storage e.g. for R or Python packages installed by the user and smaller data sets. It thus represents a compromise between the GlusterFS system and the local hard drives of the computing nodes. In addition, the computing nodes could also be modernized with funds from Professor Busch. The two machines with 768GB RAM relieved the bignode (node24) while five small computing nodes with 32 threads and 384GB RAM were able to meet the increased memory requirements. The newly emerged AMD EPYC CPUs appeared very interesting and were tried out in two computing nodes with 32 threads and 512 GB RAM.

In 2019, the data network connection was also significantly improved.

A backup has been providing better security for the GlusterFS since 2020. For this purpose, a system with 1.6PB memory was procured, which regularly saves the data status. Three computing nodes were equipped with Tesla T4 GPUs in order to significantly accelerate certain calculations optimized for them, and these were well received. The number of computing nodes has meanwhile been increased to 41.

Organisation

Helge Illig

Gebäude 64, 034 (1.OG)

Tel. +49 451 3101 2000

helge.illig(at)uni-luebeck.de

Support

If there are questions or problems, please contact us at

Administration

Frank Rühlemann

Gebäude 64, 031 (EG)

Tel. +49 451 3101 2034

f.ruehlemann(at)uni-luebeck.de

Gudrun Amedick

Gebäude 64, 032 (EG)

Tel. +49 451 3101 2035

itsc.support-omics(at)uni-luebeck.de